How To Install Apache Spark On Windows 10

Introduction

Apache Spark is an open-source framework that processes large volumes of stream data from multiple sources. Spark is used in distributed computing with machine learning applications, data analytics, and graph-parallel processing.

This guide will show you how to install Apache Spark on Windows 10 and test the installation.

Prerequisites

- A system running Windows 10

- A user account with administrator privileges (required to install software, modify file permissions, and modify system PATH)

- Command Prompt or PowerShell

- A tool to extract .tar files, such as 7-Zip

Install Apache Spark on Windows

Installing Apache Spark on Windows 10 may seem complicated to novice users, but this simple tutorial will have you up and running. If you already have Java 8 and Python 3 installed, you can skip the first two steps.

Step 1: Install Java 8

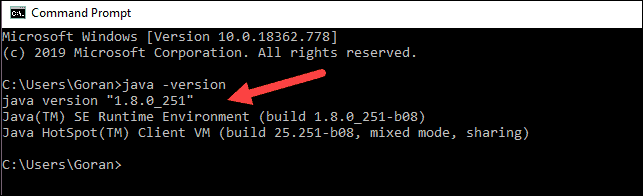

Apache Spark requires Java 8. You can check whether Java is installed using the command prompt.

Open the command line by clicking Start > type cmd > click Command Prompt.

Type the following command in the command prompt:

java -version

If Java is installed, it will respond with the following output:

Your version may be different. In a version string such as 1.8.0_251, the second number is the Java version: in this case, Java 8.

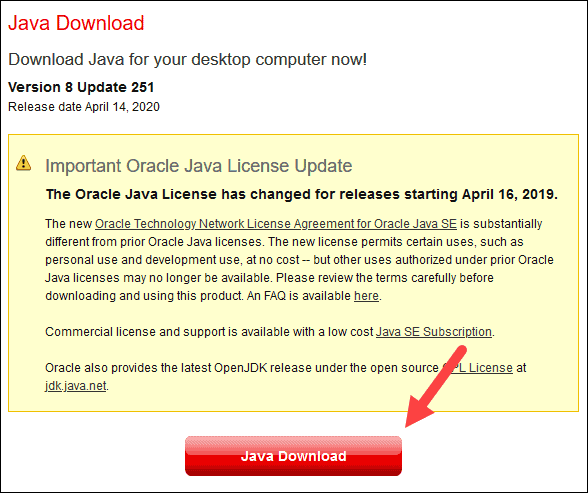
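If you prefer to script this check once Python is installed (Step 2), here is a minimal sketch. It runs `java -version`, reads the quoted version string, and applies the rule above: for 1.x strings the second number is the Java version, while Java 9 and later put the major version first. The function names are our own and are not part of any Java tooling.

```python
import re
import subprocess
from typing import Optional


def java_major_version(version: str) -> int:
    """Return the major Java version from a string such as '1.8.0_251' or '11.0.7'."""
    parts = re.split(r"[._+-]", version)
    if parts[0] == "1":
        # Java 8 and earlier use the 1.<major>.0_<update> scheme,
        # so the second number is the Java version.
        return int(parts[1])
    # Java 9 and later put the major version first.
    return int(parts[0])


def installed_java_version() -> Optional[str]:
    """Run `java -version` and return the quoted version string, or None if Java is not found."""
    try:
        result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    except OSError:
        return None  # `java` is not on PATH
    # The version line is written to stderr, e.g.: java version "1.8.0_251"
    match = re.search(r'version "([^"]+)"', result.stderr + result.stdout)
    return match.group(1) if match else None


if __name__ == "__main__":
    version = installed_java_version()
    if version is None:
        print("Java not found; install Java 8 first.")
    else:
        major = java_major_version(version)
        print(f"Java {version} (major version {major})")
        if major != 8:
            print("Warning: this guide assumes Java 8.")
```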

If you don't have Java installed:

1. Open a browser window and navigate to https://java.com/en/download/.

2. Click the Java Download button and save the file to a location of your choice.

3. Once the download finishes, double-click the file to install Java.

Note: At the time this article was written, the latest Java 8 version is 1.8.0_251. Installing a later Java 8 update will still work. This process only needs the Java Runtime Environment (JRE); the full Development Kit (JDK) is not required. The download link to the JDK is https://www.oracle.com/java/technologies/javase-downloads.html.

Step 2: Install Python

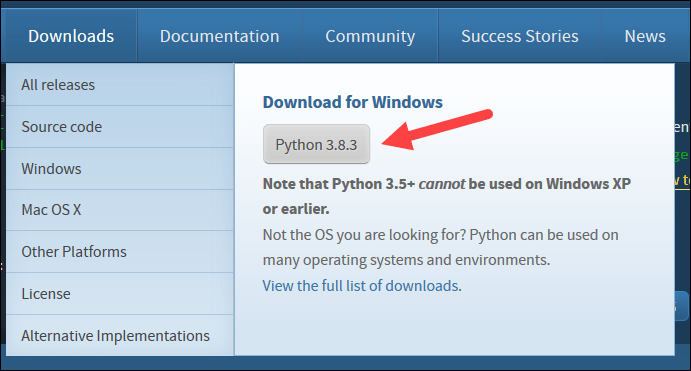

1. To install Python, navigate to https://www.python.org/ in your web browser.

2. Mouse over the Downloads menu option and click Python 3.8.3. Version 3.8.3 is the latest version at the time of writing.

3. Once the download finishes, run the file.

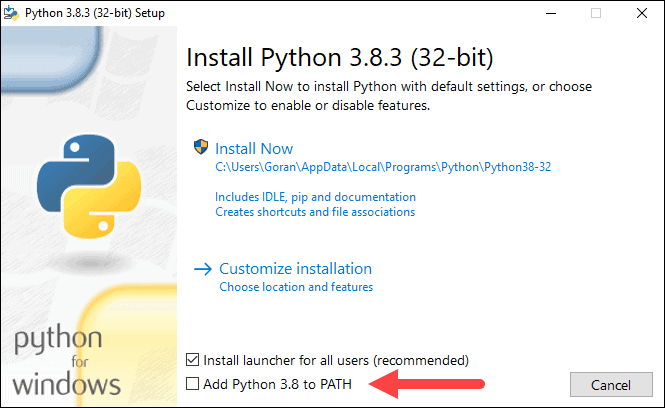

4. Near the bottom of the first setup dialog box, check Add Python 3.8 to PATH. Leave the other box checked.

5. Next, click Customize installation.

6. You can leave all boxes checked at this step, or you can uncheck the options you do not want.

7. Click Next.

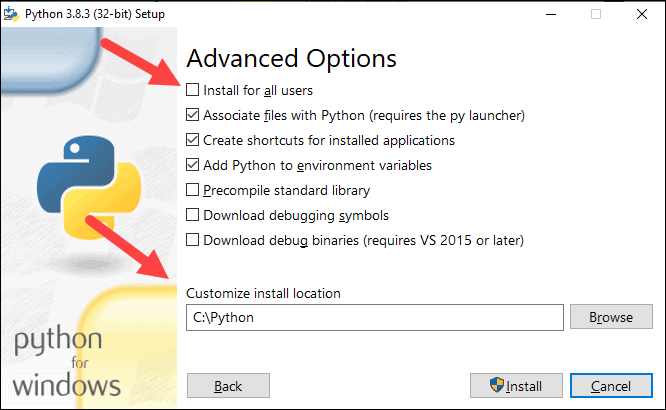

8. Select the box Install for all users and leave other boxes as they are.

9. Under Customize install location, click Browse and navigate to the C drive. Add a new folder and name it Python.

10. Select that folder and click OK.

11. Click Install, and let the installation complete.

12. When the installation completes, click the Disable path length limit option at the bottom and then click Close.

13. If you have a command prompt open, restart it. Verify the installation by checking the version of Python:

python --version

The output should print Python 3.8.3.

Note: For detailed instructions on how to install Python 3 on Windows or how to troubleshoot potential issues, refer to our Install Python 3 on Windows guide.
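The same check can be run from a script. This minimal sketch resolves `python` through PATH (the same lookup the command prompt performs) and parses the `python --version` output; if it returns None, the Add Python 3.8 to PATH option probably was not applied. The helper names are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple


def parse_python_version(output: str) -> Tuple[int, int, int]:
    """Extract (major, minor, micro) from text such as 'Python 3.8.3'."""
    match = re.search(r"Python (\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        raise ValueError(f"unexpected version output: {output!r}")
    major, minor, micro = (int(g) for g in match.groups())
    return major, minor, micro


def python_version_on_path(command: str = "python") -> Optional[Tuple[int, int, int]]:
    """Return the version of the interpreter `command` resolves to, or None if it is missing."""
    executable = shutil.which(command)  # searches PATH like the command prompt does
    if executable is None:
        return None
    result = subprocess.run([executable, "--version"], capture_output=True, text=True)
    # Python 3 prints the version to stdout; older releases used stderr, so read both.
    return parse_python_version(result.stdout + result.stderr)


if __name__ == "__main__":
    version = python_version_on_path()
    if version is None:
        print("python is not on PATH; re-run the installer with Add Python 3.8 to PATH checked.")
    else:
        print("Found Python " + ".".join(map(str, version)))
```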

Step 3: Download Apache Spark

1. Open a browser and navigate to https://spark.apache.org/downloads.html.

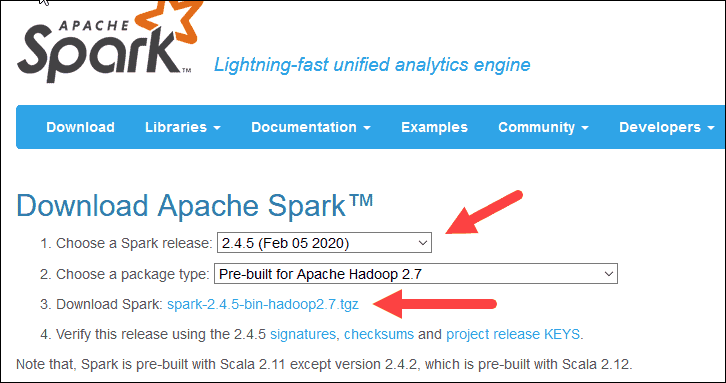

2. Under the Download Apache Spark heading, there are two drop-down menus. Use the current non-preview version.

- In our case, in the Choose a Spark release drop-down menu, select 2.4.5 (Feb 05 2020).

- In the second drop-down, Choose a package type, leave the selection Pre-built for Apache Hadoop 2.7.

3. Click the spark-2.4.5-bin-hadoop2.7.tgz link.

4. A page with a list of mirrors loads where you can see different servers to download from. Pick any from the list and save the file to your Downloads folder.
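If you would rather script the download, the sketch below builds the same file name the downloads page shows. It assumes the Apache release archive at archive.apache.org keeps each release under a spark-<version> folder with a .sha512 checksum file next to the package; the mirror you pick above may use a different location, and the helper names are hypothetical.

```python
import urllib.request
from pathlib import Path
from typing import Tuple

# Assumption: the Apache release archive layout for Spark releases.
ARCHIVE_BASE = "https://archive.apache.org/dist/spark"


def spark_package_name(spark_version: str, hadoop_version: str) -> str:
    """Build the file name shown on the downloads page, e.g. spark-2.4.5-bin-hadoop2.7.tgz."""
    return f"spark-{spark_version}-bin-hadoop{hadoop_version}.tgz"


def spark_archive_urls(spark_version: str, hadoop_version: str) -> Tuple[str, str]:
    """Return (package URL, checksum URL) for a release in the Apache archive."""
    name = spark_package_name(spark_version, hadoop_version)
    package_url = f"{ARCHIVE_BASE}/spark-{spark_version}/{name}"
    return package_url, package_url + ".sha512"


def download(url: str, dest_dir: Path = Path.home() / "Downloads") -> Path:
    """Save `url` into `dest_dir` (your Downloads folder by default) and return the local path."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    target = dest_dir / url.rsplit("/", 1)[-1]
    urllib.request.urlretrieve(url, target)
    return target


if __name__ == "__main__":
    package_url, checksum_url = spark_archive_urls("2.4.5", "2.7")
    print(download(package_url))
    print(download(checksum_url))
```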

Step 4: Verify Spark Software File

1. Verify the integrity of your download by checking the checksum of the file. This ensures you are working with unaltered, uncorrupted software.

2. Navigate back to the Spark Download page and open the Checksum link, preferably in a new tab.

3. Next, open a command line and enter the following command:

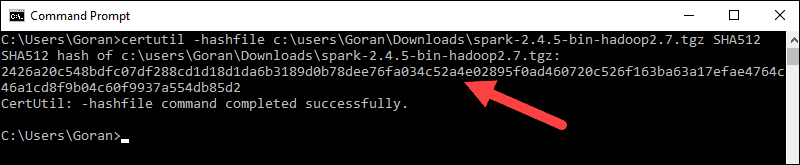

certutil -hashfile c:\users\username\Downloads\spark-2.4.5-bin-hadoop2.7.tgz SHA512

4. Replace username with your Windows user name. The system displays a long alphanumeric code, along with the message CertUtil: -hashfile command completed successfully.

5. Compare the code to the one you opened in a new browser tab. If they match, your download file is uncorrupted.
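The same comparison can be done in Python with hashlib, as an alternative to certutil. This sketch assumes you saved the text from the Checksum link to a file next to the archive (the .sha512 file name in the usage block is hypothetical). Published checksums may be grouped into uppercase blocks, while hashlib prints one lowercase hex string, so the sketch strips spacing and case before comparing; the two layouts it handles are assumptions, not a guarantee for every release.

```python
import hashlib
from pathlib import Path


def sha512_of_file(path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so a large archive is never loaded into memory at once."""
    digest = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def normalize_published_checksum(text: str) -> str:
    """Reduce a published checksum to lowercase hex.

    Assumed layouts: 'name.tgz: 2426A20C 548BDFC0 ...' (grouped, possibly over
    several lines) or 'hexdigest  name.tgz' (sha512sum style).
    """
    if ":" in text:
        return "".join(text.split(":", 1)[1].split()).lower()
    return text.split()[0].lower()


def verify_download(path, published: str) -> bool:
    """True if the file's SHA-512 matches the published checksum."""
    return sha512_of_file(path) == normalize_published_checksum(published)


if __name__ == "__main__":
    downloads = Path.home() / "Downloads"
    archive = downloads / "spark-2.4.5-bin-hadoop2.7.tgz"
    published = (downloads / "spark-2.4.5-bin-hadoop2.7.tgz.sha512").read_text()
    print("OK" if verify_download(archive, published) else "MISMATCH: download again")
```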

Step 5: Install Apache Spark

Installing Apache Spark involves extracting the downloaded file to the desired location.

1. Create a new folder named Spark in the root of your C: drive. From a command line, enter the following:

cd \
mkdir Spark

2. In Explorer, locate the Spark file you downloaded.

3. Right-click the file and extract it to C:\Spark using the tool you have on your system (e.g., 7-Zip). With 7-Zip, the .tgz file first extracts to a .tar file; extract that .tar file to C:\Spark as well.

4. Now, your C:\Spark folder has a new folder spark-2.4.5-bin-hadoop2.7 with the necessary files inside.

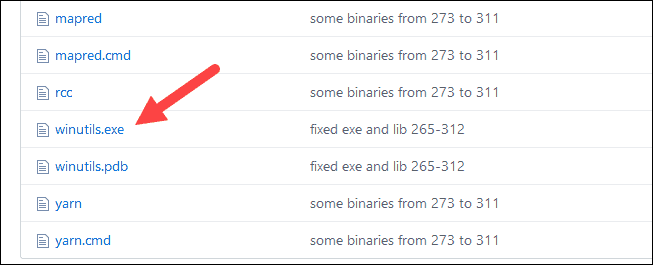
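As an alternative to 7-Zip, Python's tarfile module reads the .tgz archive in a single pass. The sketch below creates the destination folder (like `mkdir Spark` above) and extracts into it; the default C:\Spark path mirrors this guide and the function name is our own. It returns the top-level folder or folders it created, which for the package above should be spark-2.4.5-bin-hadoop2.7.

```python
import tarfile
from pathlib import Path
from typing import List


def extract_spark(archive, dest=r"C:\Spark") -> List[Path]:
    """Extract a Spark .tgz into `dest` and return the top-level folders it contained."""
    dest_dir = Path(dest)
    dest_dir.mkdir(parents=True, exist_ok=True)  # same effect as `mkdir Spark`
    with tarfile.open(archive, "r:gz") as tar:
        top_level = {Path(m.name).parts[0] for m in tar.getmembers() if m.name}
        if hasattr(tarfile, "data_filter"):
            # Newer Pythons can reject unsafe members (absolute paths, links outside dest).
            tar.extractall(dest_dir, filter="data")
        else:
            tar.extractall(dest_dir)
    return [dest_dir / name for name in sorted(top_level)]


if __name__ == "__main__":
    archive = Path.home() / "Downloads" / "spark-2.4.5-bin-hadoop2.7.tgz"
    print(extract_spark(archive))
```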

Step 6: Add winutils.exe File

Download the winutils.exe file that matches the Hadoop version of the Spark package you downloaded (Hadoop 2.7 in this guide).

1. Navigate to https://github.com/cdarlint/winutils, open the folder for your Hadoop version, and inside its bin folder, locate winutils.exe and click it.

2. Find the Download button on the right side to download the file.

3. Now, create a folder named hadoop on the C: drive and a bin folder inside it, using Windows Explorer or the Command Prompt.

4. Copy the winutils.exe file from the Downloads folder to C:\hadoop\bin.

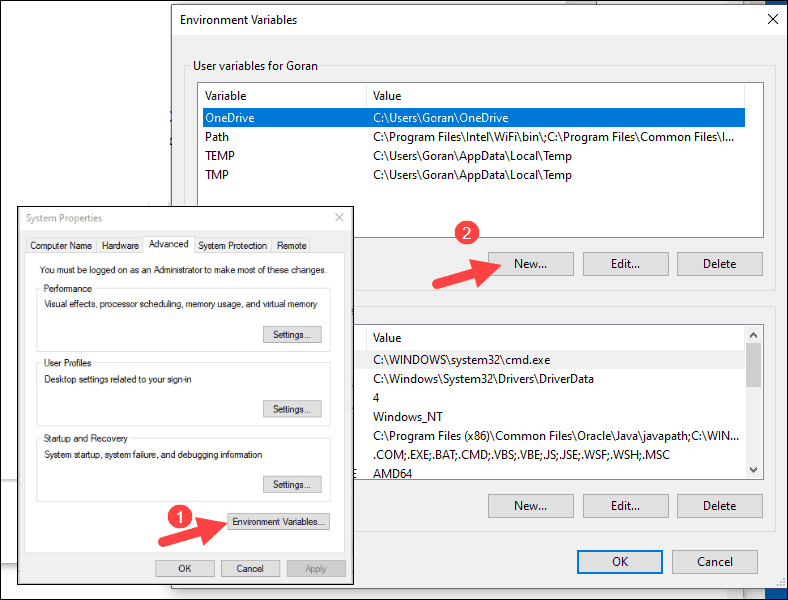
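Steps 3 and 4 can also be scripted. This minimal sketch assumes winutils.exe is already in your Downloads folder; it creates C:\hadoop\bin if it does not exist and copies the file there. The function name is hypothetical.

```python
import shutil
from pathlib import Path


def install_winutils(downloaded, hadoop_home=r"C:\hadoop") -> Path:
    """Create <hadoop_home>\\bin if needed and copy winutils.exe into it."""
    bin_dir = Path(hadoop_home) / "bin"
    bin_dir.mkdir(parents=True, exist_ok=True)  # creates both the hadoop and bin folders
    target = bin_dir / "winutils.exe"
    shutil.copy2(downloaded, target)  # copy2 also preserves the file's timestamps
    return target


if __name__ == "__main__":
    print(install_winutils(Path.home() / "Downloads" / "winutils.exe"))
```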

Step 7: Configure Environment Variables

Configuring environment variables in Windows adds the Spark and Hadoop locations to your system PATH. This allows you to run the Spark shell directly from a command prompt window.

1. Click Start and type environment.

2. Select the result labeled Edit the system environment variables.

3. A System Properties dialog box appears. In the lower-right corner, click Environment Variables and then click New in the next window.

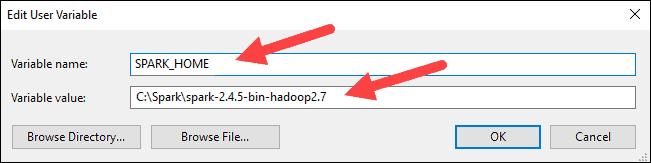

4. For Variable Name, type SPARK_HOME.

5. For Variable Value, type C:\Spark\spark-2.4.5-bin-hadoop2.7 and click OK. If you changed the folder path, use that one instead.

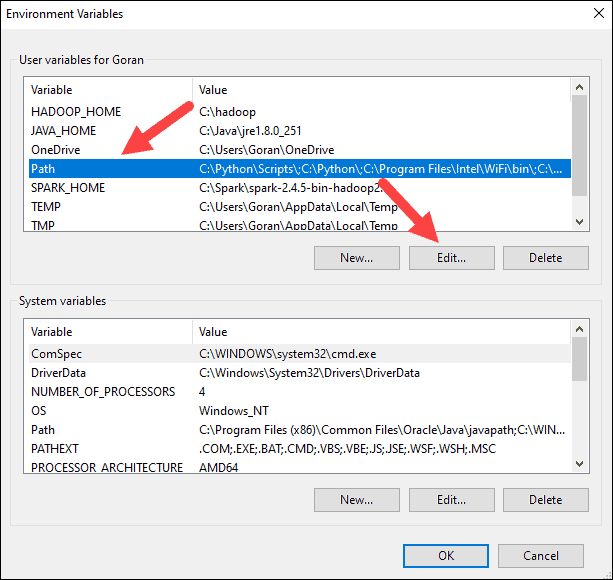

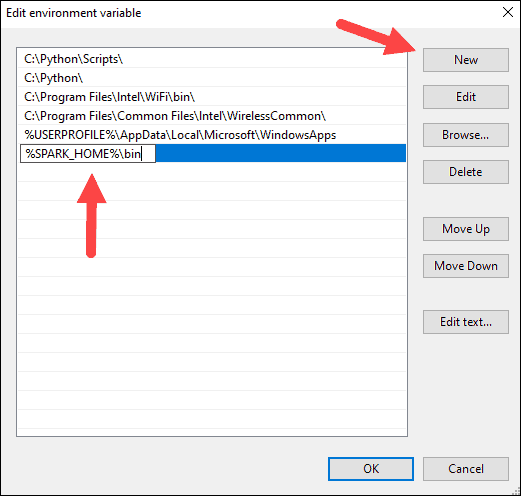

6. In the top box, click the Path entry, then click Edit. Be careful when editing the system path, and avoid deleting any entries already on the list.

7. You should see a box with entries on the left. On the right, click New.

8. The system highlights a new line. Enter the path to the Spark folder C:\Spark\spark-2.4.5-bin-hadoop2.7\bin. We recommend using %SPARK_HOME%\bin to avoid possible issues with the path.

9. Repeat this process for Hadoop and Java.

- For Hadoop, the variable name is HADOOP_HOME, and for the value use the path of the folder you created earlier: C:\hadoop. Add C:\hadoop\bin to the Path variable field, but we recommend using %HADOOP_HOME%\bin.

- For Java, the variable name is JAVA_HOME, and for the value use the path to your Java installation directory (in our case, C:\Program Files\Java\jdk1.8.0_251).

10. Click OK to close all open windows.

Note: Start by restarting the Command Prompt to apply the changes. If that doesn't work, reboot the system.
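After restarting the Command Prompt, you can sanity-check the variables from this step with a short script. This is a sketch under our own assumptions: it checks that SPARK_HOME, HADOOP_HOME, and JAVA_HOME are set and that the Spark and Hadoop bin folders appear on PATH, whether you entered them literally or as %SPARK_HOME%\bin and %HADOOP_HOME%\bin. It does not check that the folders exist, and the function names are hypothetical.

```python
import ntpath
import os
import re
from typing import List, Mapping, Optional

REQUIRED_VARS = ("SPARK_HOME", "HADOOP_HOME", "JAVA_HOME")


def _expand(value: str, env: Mapping[str, str]) -> str:
    """Expand %NAME% references roughly like cmd does; unknown names are left untouched."""
    return re.sub(r"%([^%]+)%", lambda m: env.get(m.group(1).upper(), m.group(0)), value)


def _normalize(path: str) -> str:
    """Compare Windows paths case-insensitively and ignore a trailing backslash."""
    return ntpath.normcase(path.strip().rstrip("\\"))


def check_spark_env(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return a list of problems with the Step 7 variables (an empty list means all good)."""
    env = dict(os.environ) if env is None else env
    problems = [f"{name} is not set" for name in REQUIRED_VARS if not env.get(name)]
    # Windows separates PATH entries with ';', so split on that explicitly.
    entries = {_normalize(_expand(e, env)) for e in env.get("PATH", "").split(";") if e.strip()}
    for name in ("SPARK_HOME", "HADOOP_HOME"):
        if env.get(name):
            bin_dir = _normalize(ntpath.join(env[name], "bin"))
            if bin_dir not in entries:
                problems.append(f"{name}\\bin is not on PATH")
    return problems


if __name__ == "__main__":
    for problem in check_spark_env() or ["All Step 7 variables look correct."]:
        print(problem)
```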

Step 8: Launch Spark

1. Open a new command-prompt window by right-clicking Command Prompt and selecting Run as administrator:

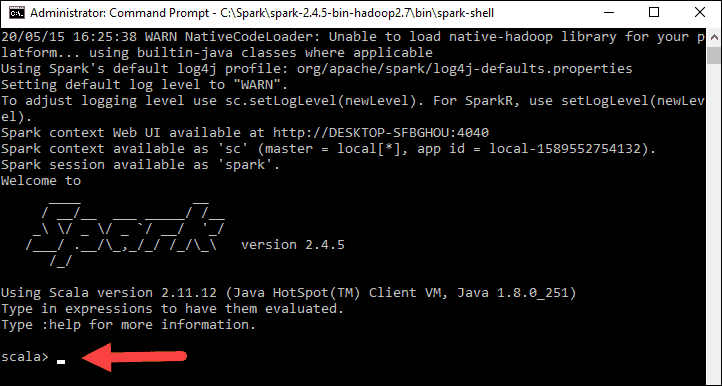

2. To start Spark, enter:

C:\Spark\spark-2.4.5-bin-hadoop2.7\bin\spark-shell

If you set the environment path correctly, you can type spark-shell to launch Spark.

3. The system should display several lines indicating the status of the application. You may get a Java pop-up. Select Allow access to continue.

Finally, the Spark logo appears, and the prompt displays the Scala shell.

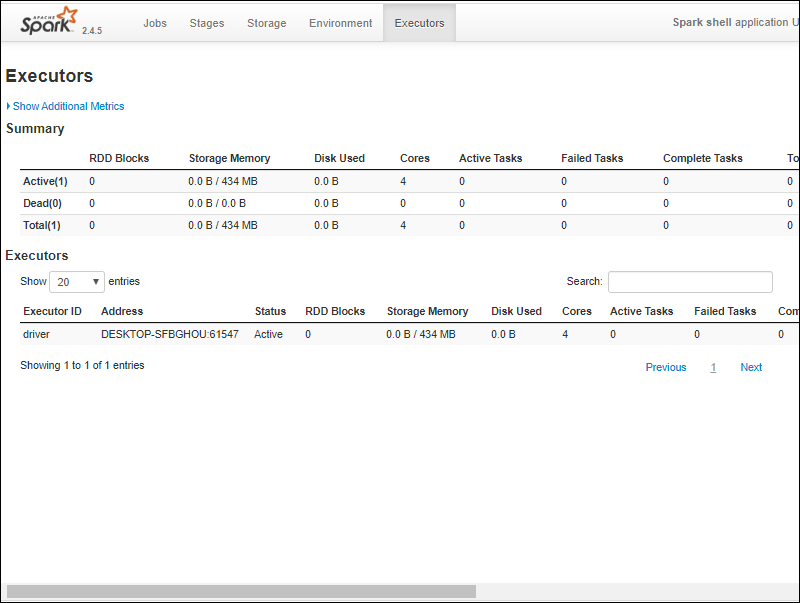

4. Open a web browser and navigate to http://localhost:4040/.

5. You can replace localhost with the name of your system.

6. You should see an Apache Spark shell Web UI. The example below shows the Executors page.

7. To exit Spark and close the Scala shell, press ctrl-d in the command-prompt window.
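Besides the browser view, the Web UI serves Spark's monitoring REST API under /api/v1 on the same port. The sketch below asks http://localhost:4040/api/v1/applications which applications are running and returns None if nothing answers, for example after you close the shell. The function name and the two-second timeout are our own choices.

```python
import json
import urllib.request
from typing import Any, List, Optional


def list_spark_applications(base_url: str = "http://localhost:4040",
                            timeout: float = 2.0) -> Optional[List[Any]]:
    """Query the Web UI's REST endpoint; return the parsed application list, or None if unreachable."""
    url = base_url.rstrip("/") + "/api/v1/applications"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return json.load(response)
    except (OSError, ValueError):
        # OSError covers URLError (nothing listening) and timeouts; ValueError covers bad JSON.
        return None


if __name__ == "__main__":
    apps = list_spark_applications()
    if apps is None:
        print("No Spark Web UI on port 4040; is spark-shell still running?")
    else:
        for app in apps:
            print(app.get("id"), app.get("name"))
```

If port 4040 is already taken, Spark binds the UI to the next free port (4041, and so on), so pass that base URL instead.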

Note: If you installed Python, you can run Spark using Python with this command:

pyspark

Exit using quit().

Examination Spark

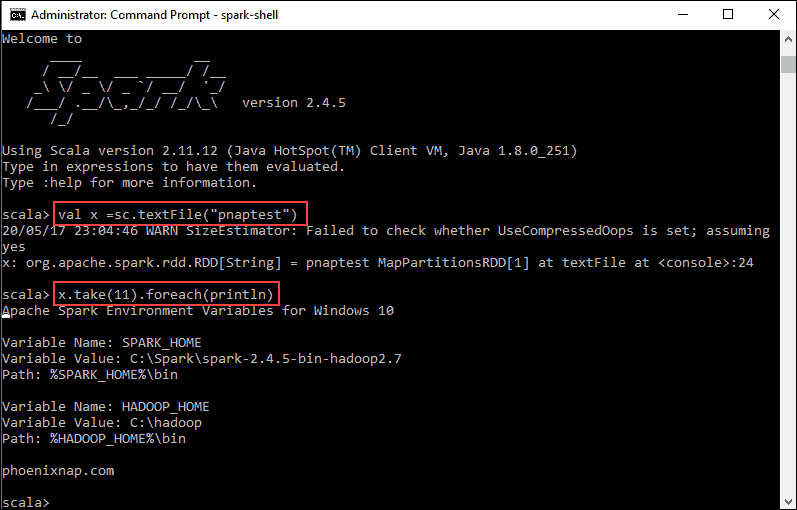

In this example, we will launch the Spark shell and use Scala to read the contents of a file. You can use an existing file, such as the README file in the Spark directory, or you can create your own. We created pnaptest with some text.

1. Open a command-prompt window, navigate to the folder with the file you want to use, and launch the Spark shell.

2. First, declare a variable to use in the Spark context with the name of the file. Remember to add the file extension if there is one.

val x = sc.textFile("pnaptest")

3. The output shows an RDD is created. Then, we can view the file contents by using this command to call an action:

x.take(11).foreach(println)

This command instructs Spark to print 11 lines from the file you specified. To perform an action on this file (value x), add another value y and do a map transformation.

4. For example, you can print the characters in reverse with this command:

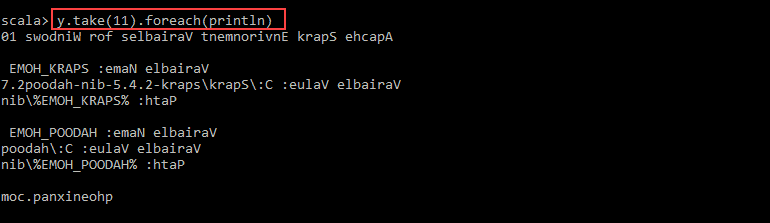

val y = x.map(_.reverse)

5. The system creates a child RDD in relation to the first one. Then, specify how many lines you want to print from the value y:

y.take(11).foreach(println)

The output prints up to 11 lines of the pnaptest file, with the characters of each line reversed.
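To make the two Scala commands concrete, here is a plain-Python model of what they return. It does not use Spark: it only mimics take(11) and map(_.reverse) on an ordinary list, so unlike an RDD it is neither lazy nor distributed. The file name pnaptest matches the example above; the helper names are hypothetical.

```python
from itertools import islice
from pathlib import Path
from typing import Iterable, List


def read_lines(path) -> List[str]:
    """Roughly what sc.textFile yields: one element per line, without line terminators."""
    return Path(path).read_text(encoding="utf-8").splitlines()


def take(lines: Iterable[str], n: int) -> List[str]:
    """Like x.take(n): the first n elements, or fewer if the input is shorter."""
    return list(islice(lines, n))


def reverse_each(lines: Iterable[str]) -> List[str]:
    """Like x.map(_.reverse): reverse the characters of each line; line order is unchanged."""
    return [line[::-1] for line in lines]


if __name__ == "__main__":
    x = read_lines("pnaptest")  # local copy of the example file
    for line in take(reverse_each(x), 11):
        print(line)
```

In PySpark, the equivalent of the Scala session would be sc.textFile("pnaptest").map(lambda line: line[::-1]).take(11).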

When done, exit the shell using ctrl-d.

Conclusion

You should now have a working installation of Apache Spark on Windows 10 with all dependencies installed. Get started running an instance of Spark in your Windows environment.

We also suggest learning more about what a Spark DataFrame is, its features, and how to use Spark DataFrames when collecting data.


Source: https://phoenixnap.com/kb/install-spark-on-windows-10

Posted by: ellisaffel1999.blogspot.com
